Digital audio explained with stacks of paper

Different aspects of digital audio explained and demonstrated using stacks of paper. It is a useful analogy that makes the subject tangible and can increase your productivity.

In this article, I explain how digital audio is stored and streamed, and how to manage the data. I make digital audio tangible by demonstrating and explaining file sizes with the visual metaphor of stacks of paper.

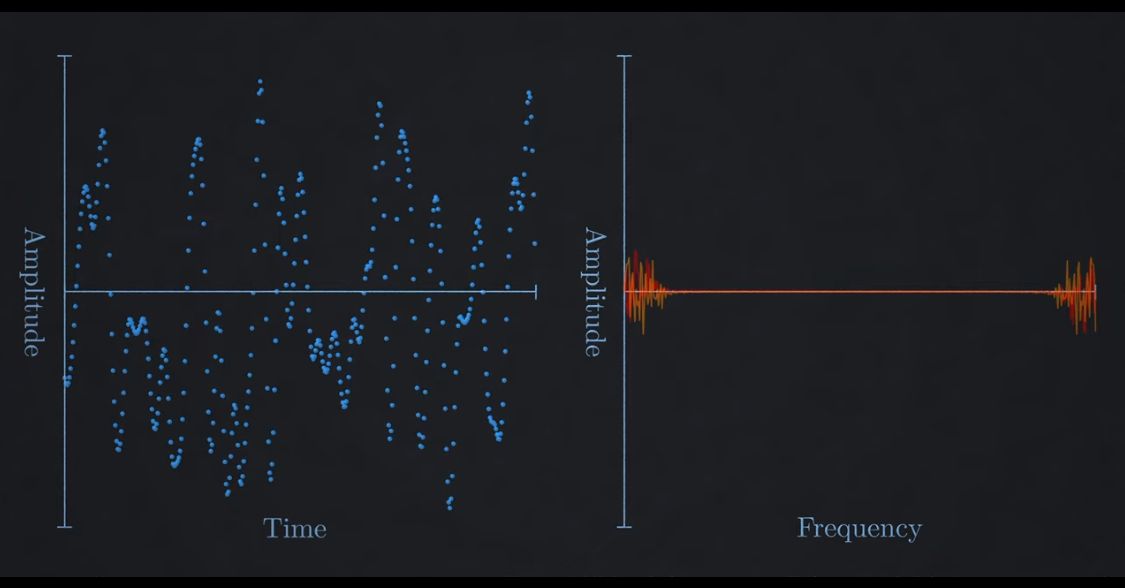

Samples and bit depth

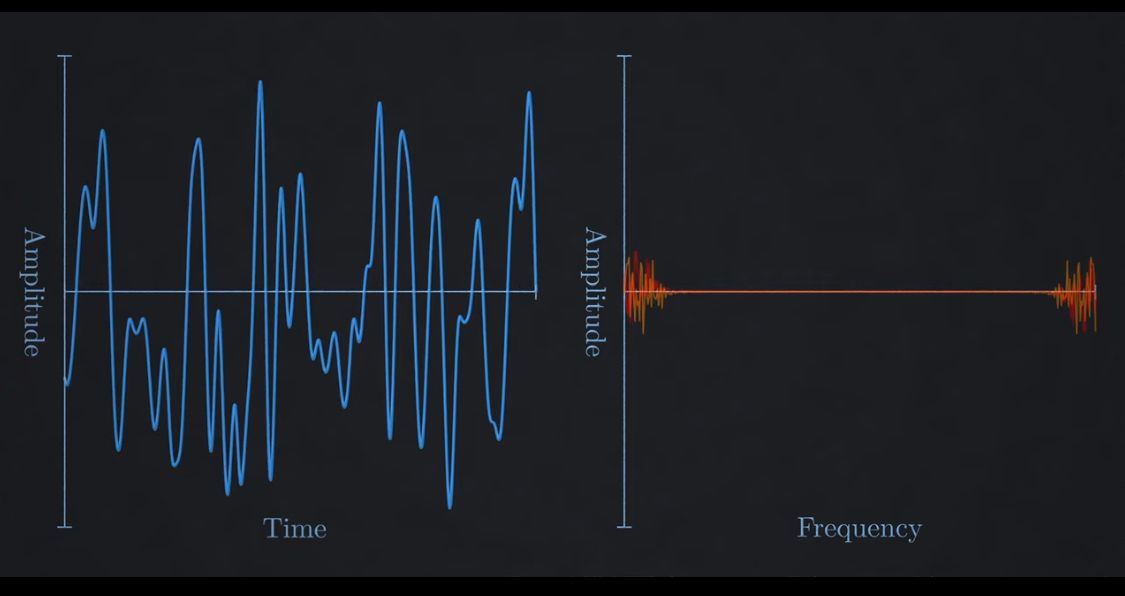

A good place to start is the sample. A sample is an atomic slice of the audio file: a single data point of the sound wave.

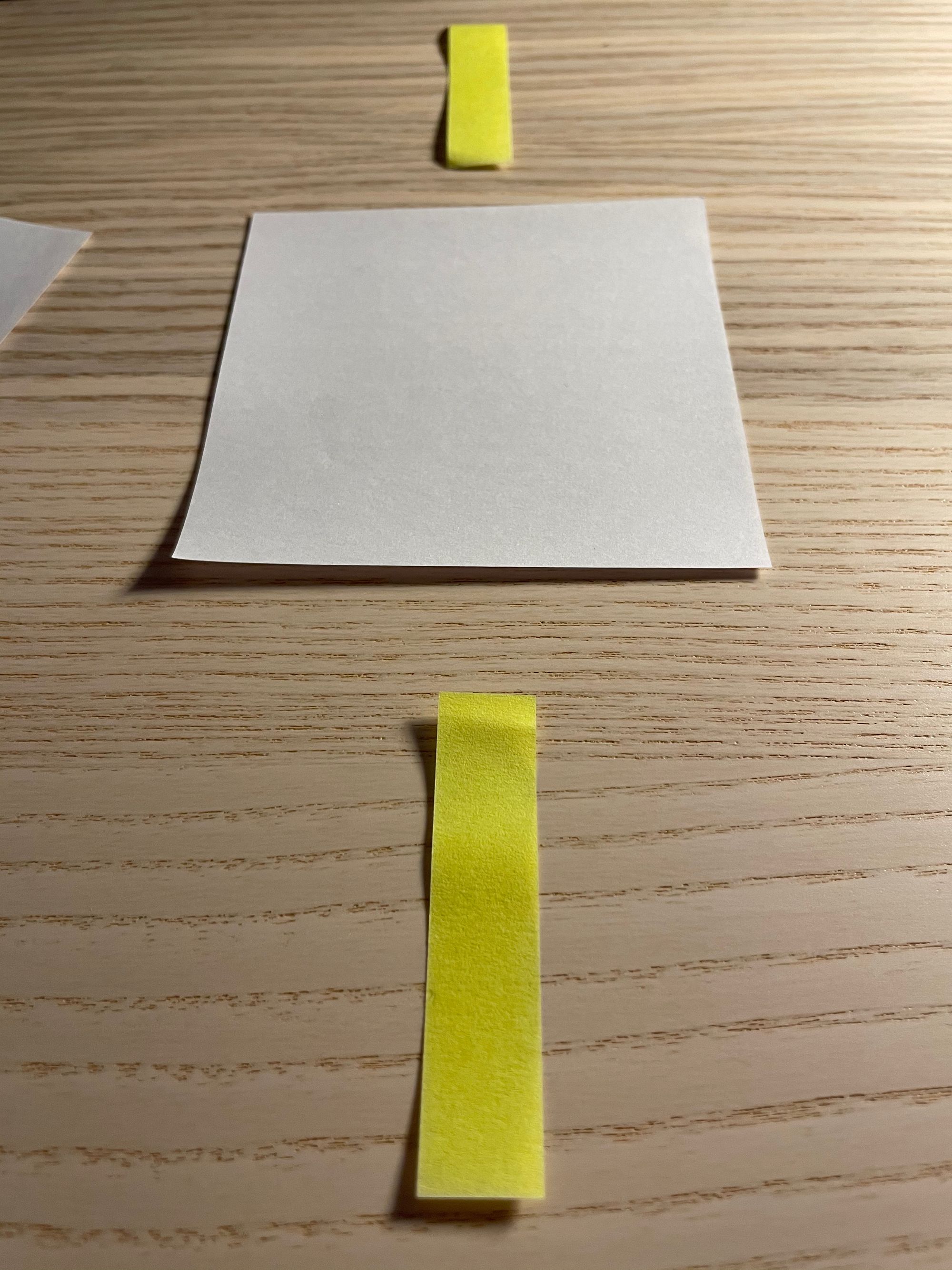

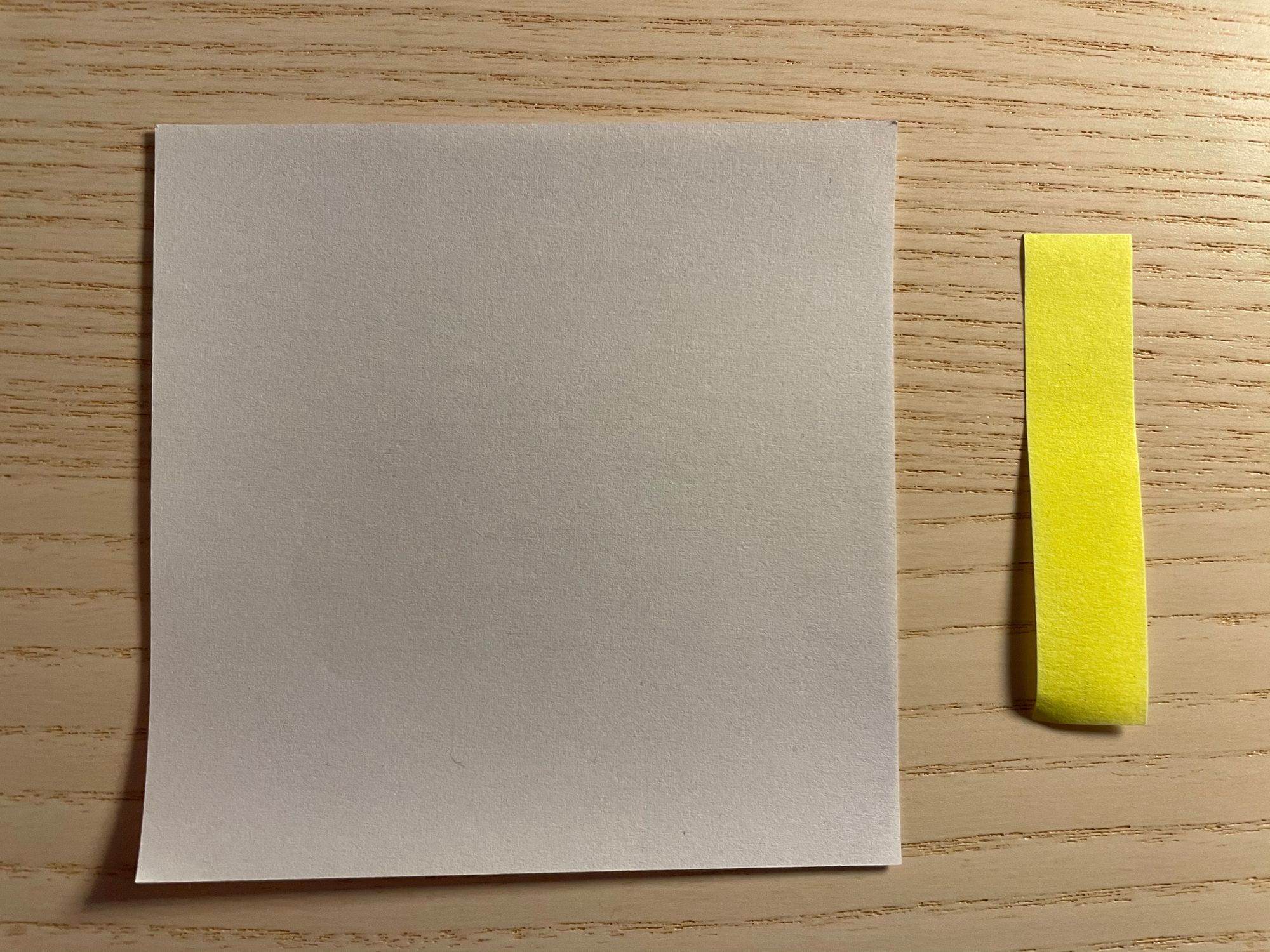

In our paper comparison, a sample is like a single sheet from the stack.

With the single sample comes bit depth: how much information the sheet contains. The white paper on the left holds more information than the small yellow one. The white one represents a high bit depth and the yellow one a lower bit depth.

For music, the bit depths you will come across are 16 bit and 24 bit. For voice, like on phone systems, you will see 8 bits. While the sample rate is concerned with capturing frequency accurately, bit depth is related to dynamic range.

Dynamic range is the distance between the quietest and loudest sounds in a piece of music, together with how finely the levels within that range are resolved.
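To put numbers on the size of the sheet, here is a minimal sketch in Python. It assumes plain linear (PCM) samples and uses the common rule of thumb that each bit adds roughly 6 dB of theoretical dynamic range. The function names are my own, chosen for illustration.

```python
import math


def quantization_levels(bit_depth: int) -> int:
    """Number of distinct values a single sample can take."""
    return 2 ** bit_depth


def theoretical_dynamic_range_db(bit_depth: int) -> float:
    """Ratio between the largest and smallest level, expressed in decibels."""
    return 20 * math.log10(2 ** bit_depth)


for bits in (8, 16, 24):
    levels = quantization_levels(bits)
    dynamic_range = theoretical_dynamic_range_db(bits)
    print(f"{bits}-bit: {levels:,} levels, about {dynamic_range:.1f} dB")
```

These are theoretical upper bounds. Real recordings and playback equipment usually deliver less.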

For many years, 16 bit was the standard, the depth used on CDs. While 16 bit is still very common, 24 bit is now becoming more widely used for Hi-Res (HD) audio. Consumers can now purchase music in lossless formats that support higher sample rates and bit depths.

The illustration below shows this concept in detail:

Sample rate

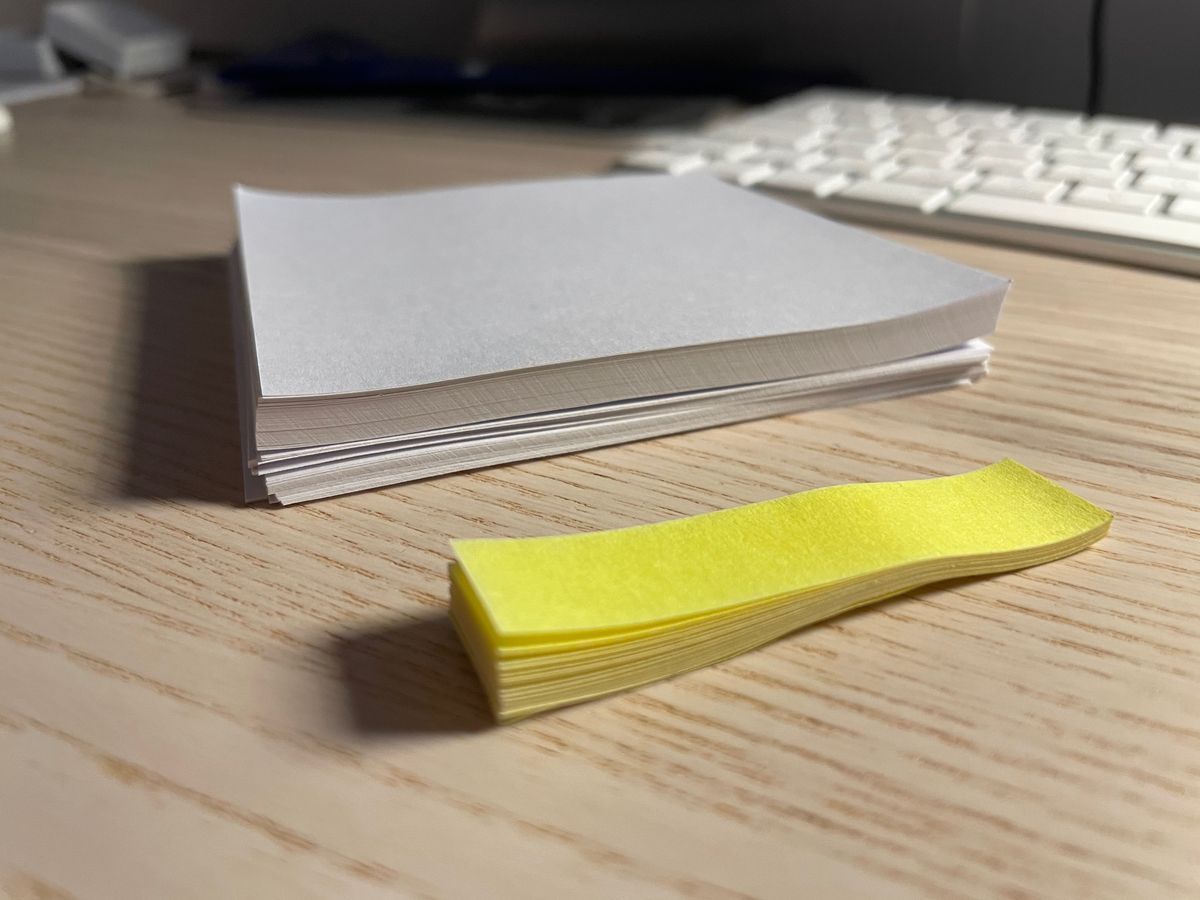

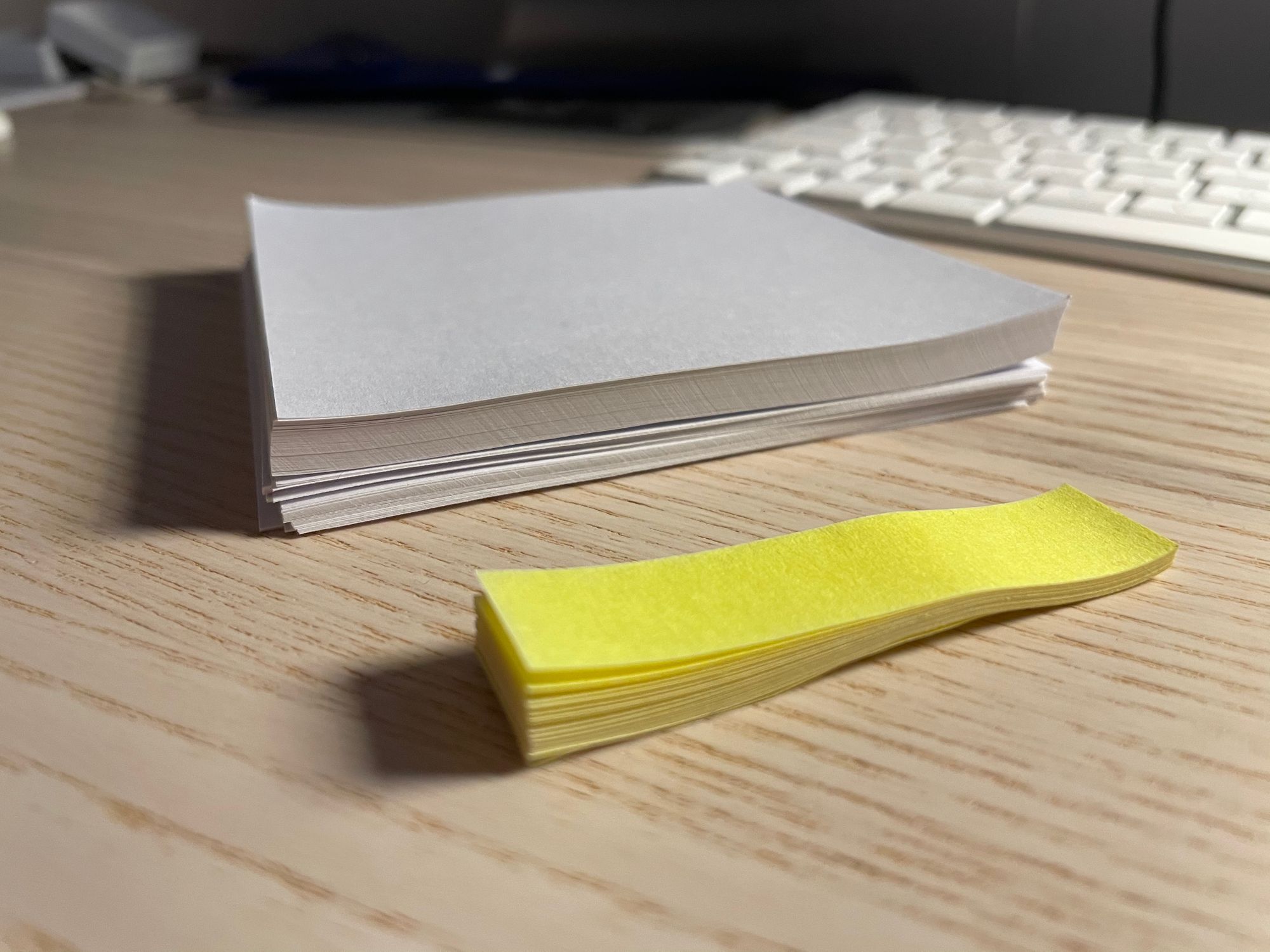

To produce sound, we now need to add time to the equation. The faster you move through the stack, the more fidelity you get.

Sample rate and bit rate

How many samples of data are taken per second? This is normally measured in hertz. For example, CD-quality audio uses a sample rate of 44.1 kHz (44,100 audio samples per second).

A single voice signal on the phone is sampled at 8 kHz (8,000 samples per second). Each sample is quantized into 8 bits, yielding a rate of 64 kbps, which is used universally on phone systems.
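To see how tall the stack gets, the sketch below counts the sheets (samples) in one minute of single-channel audio. The function name and the one-minute duration are my own choices for illustration. The 8,000 samples per second for phone audio follows from the 64 kbps figure divided by 8 bits per sample.

```python
def samples_in(duration_s: int, sample_rate_hz: int) -> int:
    """Number of samples (sheets of paper) per channel in a recording."""
    return duration_s * sample_rate_hz


one_minute = 60  # seconds

for label, rate_hz in (("Music at 44.1 kHz", 44_100), ("Phone at 8 kHz", 8_000)):
    sheets = samples_in(one_minute, rate_hz)
    print(f"{label}: {sheets:,} sheets per minute")
```

One minute of music at 44.1 kHz comes to 2,646,000 sheets per channel, against 480,000 for the phone call.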

Bitrate

When audio streaming became the norm, bitrate became an important measurement. How much data is needed to provide the right quality of sound? Are we moving slowly or quickly through the stack, and how big are the sheets?

We use bitrate to describe the fidelity of audio files. An MP3 file that was compressed at 320 kbps will have much better sound quality than one compressed at 128 kbps. Or, more information can be contained on a bigger sheet of paper than on a smaller one.

Bitrate is also the measure of the rate at which data is transferred from one point to another in time. Think of it as the volume of the pile of paper that needs to be downloaded every second.

With higher bitrate, audio files with higher bit depth and sample rate can be streamed, thus increasing the quality of the audio. However, this means an increase in bandwidth used for transmission.
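The trade-off between quality and bandwidth can be estimated with a few lines of Python. This is a rough sketch that assumes a constant bitrate, ignores container overhead and metadata, and treats 1 kbps as 1,000 bits per second and 1 MB as 1,000,000 bytes. The three-minute track length is just an example.

```python
def stream_size_bytes(bitrate_bps: int, duration_s: int) -> float:
    """Approximate amount of data needed to play audio for duration_s seconds."""
    return bitrate_bps * duration_s / 8  # 8 bits per byte


three_minutes = 3 * 60  # seconds

for label, kbps in (("MP3 at 128 kbps", 128), ("MP3 at 320 kbps", 320)):
    size_mb = stream_size_bytes(kbps * 1000, three_minutes) / 1_000_000
    print(f"{label}: about {size_mb:.2f} MB for three minutes")
```

Under these assumptions the 320 kbps version needs 7.20 MB, two and a half times the 2.88 MB of the 128 kbps version.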

Bitrate formula

You can see that sample rate and bitrate are related, but not the same. Here is the formula for it:

Bitrate = Sample rate × Bit depth × Number of channels

A typical, uncompressed high-quality audio file has a sample rate of 44,100 samples per second, a bit depth of 16 bits per sample and 2 channels of stereo audio. The bit rate for this file would be:

44,100 samples per second × 16 bits per sample × 2 channels = 1,411,200 bits per second (or 1,411.2 kbps)
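The same calculation as a small Python function, in case you want to try other combinations. The function name is my own. The two examples reuse the figures from this article: CD-quality stereo and a single phone voice channel.

```python
def pcm_bitrate_bps(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Bitrate of uncompressed audio in bits per second."""
    return sample_rate_hz * bit_depth * channels


cd_audio = pcm_bitrate_bps(44_100, 16, 2)
phone_voice = pcm_bitrate_bps(8_000, 8, 1)

print(f"CD audio: {cd_audio:,} bits per second ({cd_audio / 1000:,.1f} kbps)")
print(f"Phone voice: {phone_voice:,} bits per second ({phone_voice / 1000:,.1f} kbps)")
```

This only holds for uncompressed audio. Compressed formats such as MP3 pick their bitrate during encoding.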

Variable bitrate

For unencoded streams the bit depth is fixed, and so is the bitrate. With lossy compression codecs (like MP3 and AAC), the number of bits is calculated during encoding and can vary over the course of the file. More on encoding in this article.
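When the bitrate varies, a single figure for the file can only be an average. The sketch below weights each stretch of audio by its duration. The segment values are made up for illustration and do not come from a real file, and the function name is hypothetical.

```python
def average_bitrate_bps(segments: list[tuple[float, float]]) -> float:
    """Average bitrate over (duration in seconds, bitrate in bits per second) pairs."""
    total_bits = sum(duration * bitrate for duration, bitrate in segments)
    total_seconds = sum(duration for duration, _ in segments)
    return total_bits / total_seconds


# Hypothetical one-minute track: a quiet intro, a busy middle, a calmer ending.
track = [(10, 96_000), (20, 192_000), (30, 128_000)]

print(f"Average: {average_bitrate_bps(track) / 1000:.0f} kbps")
```

For these made-up segments the average works out to 144 kbps, even though no part of the track was encoded at that rate.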