Llama 3.2 on a Mac

I tested Meta's Llama 3.2 LLM on my Mac Mini, setting it up via Docker. It’s fast, private, and generates code, but lacks the memory and multimodal features of ChatGPT.

This Friday afternoon, I spent some time experimenting with Meta’s open-source LLM, Llama 3.2 (3B).

After installing Llama 3.2 on my Mac Mini, complete with a web UI via Docker, I found the setup surprisingly quick: it took only a couple of minutes thanks to this helpful video tutorial. I used the 3B version, which is text-only (no vision support).

Steps to install Llama 3.2.

The speed and the privacy of running a local language model are definite advantages. It even provides useful code snippets when needed.
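To make the privacy point concrete, here is a minimal sketch of how a script could talk to the local model without anything leaving the machine. It rests on assumptions that are not stated above: that the model is served by Ollama on its default port (`localhost:11434`) and was pulled under the tag `llama3.2:3b`. The function names `build_request` and `ask_local_llama` are made up for illustration. If your setup uses a different server or tag, adjust accordingly.

```python
import json
import urllib.request

# Assumption: an Ollama server is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumption: the 3B text-only model was pulled under this tag.
MODEL_TAG = "llama3.2:3b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request aimed at the local server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_llama(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return its reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    # With "stream": False the server returns one JSON object
    # whose "response" field holds the generated text.
    return body["response"]
```

With the server running, calling `ask_local_llama("Write a Python function that reverses a string.")` should return the model's answer as a string. The request only ever goes to `localhost`, which is the whole appeal.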

That said, it lacks the memory functionality and multimodal capabilities I’m used to with ChatGPT, so for now it doesn’t yet compare to closed models like that.

However, for what it is—free, fast, and private—it’s a solid option.

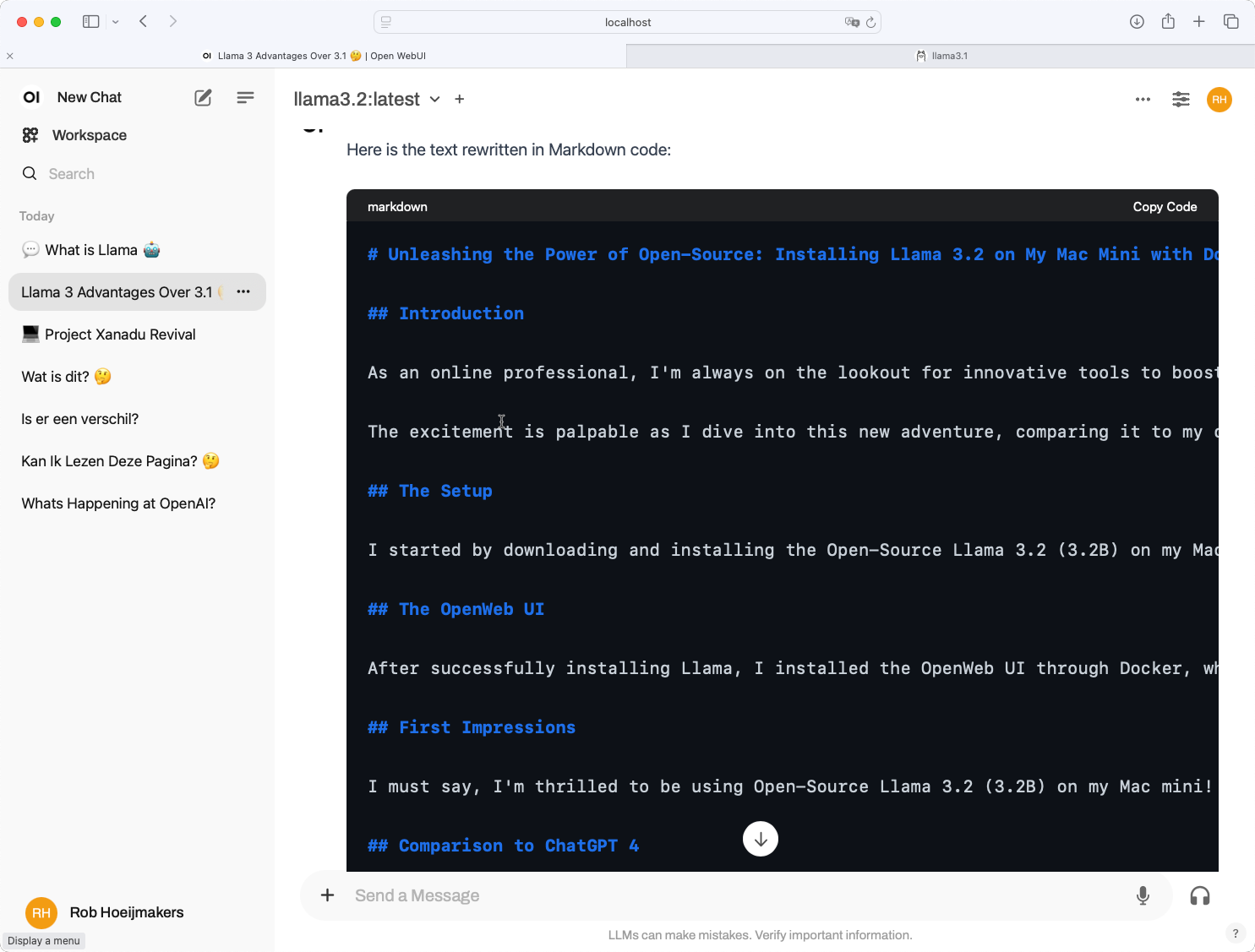

Short demo of Llama 3.2 running locally

Below, I’ll share a quick article generated by Llama 3.2 (3B) itself, along with my thoughts on the installation process and performance.

Story written by Llama 3.2, locally installed on my Mac.

Unleashing the Power of Open-Source: Installing Llama 3.2 on My Mac Mini with Docker

Introduction

As an online professional, I'm always on the lookout for innovative tools to boost my productivity and creativity. Today, I embarked on a delightful Friday afternoon project – installing Open-Source Llama 3.2 (3.2B) on my trusty Mac mini, courtesy of Docker.

The excitement is palpable as I dive into this new adventure, comparing it to my current default LLM, ChatGPT 4.

The Setup

I started by downloading and installing the Open-Source Llama 3.2 (3.2B) on my Mac mini. This wasn't a straightforward process, but with the help of Docker, I managed to get it up and running smoothly. To simplify the experience, I leveraged Docker's ease of use to create a containerized environment for Llama.

The OpenWeb UI

After successfully installing Llama, I installed the OpenWeb UI through Docker, which provided me with an intuitive interface to interact with the model. The user-friendly design made it easy to navigate and explore the capabilities of this powerful language model.

First Impressions

I must say, I'm thrilled to be using Open-Source Llama 3.2 (3.2B) on my Mac mini! The experience is incredibly smooth, with minimal latency and impressive response times. The OpenWeb UI has streamlined interactions, allowing me to effortlessly access the model's features.

Comparison to ChatGPT 4

As I began exploring Llama's capabilities, I couldn't help but compare it to my current default LLM, ChatGPT 4. Here are some initial observations:

- Accuracy: Both models delivered impressive accuracy rates, with minor variations in precision depending on the topic and context.

- Response Time: Llama (3.2B) responded almost instantly, whereas ChatGPT 4 took a fraction of a second longer.

- Contextual Understanding: While both models demonstrated remarkable contextual understanding, I noticed that Llama excelled in handling multi-turn conversations.

Conclusion

My experience with Open-Source Llama 3.2 (3.2B) on my Mac mini has been nothing short of exhilarating! The ease of use and impressive capabilities have left me eager to explore more advanced features and applications for this powerful language model.

As I continue to delve into the world of open-source AI, I'm excited to share more insights and discoveries with you, my fellow online professionals. Stay tuned for future updates on this journey!

Let's get started on this fascinating adventure together!

I wrote about the Open Source strategy from Meta: