How AI is Transforming Podcast Production: A Personal Take

Transforming podcast production: AI helps turn hours of audio into cohesive stories, blending automation with the human touch to bring ideas to life.

Imagine you no longer need to painstakingly write minutes or take notes during a meeting. Instead, every word is automatically captured, and key points are summarized for you.

That’s part of my workflow now, thanks to AI, and it’s also a major transformation happening in podcast production. Recently, I had a conversation with Marvin Jacobs from Airborne where we discussed exactly how AI tools are reshaping this space.

From Chaos to Story: The Workflow

Let’s start with how a typical podcast episode comes to life.

The process begins with collecting audio material based on an outline: interviews, sound bites, and ambient recordings. Often, as Marvin explained, they’ll record interviews that may last up to two hours, with multiple guests like researchers or experts, depending on the theme of the episode. Imagine you have hours of content from three different speakers, and your goal is to condense all of that into a cohesive half-hour episode. This is where AI steps in.

Once all the raw audio is recorded, it’s transcribed using tools like Whisper or AmberScript. These AI transcription services convert the entire conversation into text, complete with time codes. Errors are common, so the tools make it easy to correct and refine the transcript, but editing the text at this stage leaves the underlying audio unchanged. Marvin put it nicely: “It’s actually a text editor that syncs with the audio.”
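To make the idea of a time-coded transcript concrete, here is a minimal Python sketch. The `Segment` class, its field names, and the sample lines are assumptions made up for illustration; they are not the output format of Whisper or AmberScript. The point is only that correcting the text leaves the time codes, and therefore the link to the audio, untouched.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Segment:
    """One transcribed stretch of audio (hypothetical structure)."""
    start: float  # seconds from the beginning of the recording
    end: float
    speaker: str
    text: str


def timecode(seconds: float) -> str:
    """Format a number of seconds as HH:MM:SS."""
    hours, rest = divmod(int(seconds), 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def correct_text(segment: Segment, new_text: str) -> Segment:
    """Fix a transcription error; start and end still point at the same audio."""
    return replace(segment, text=new_text)


# Invented sample data, including a typical transcription slip ("hear").
transcript = [
    Segment(0.0, 4.2, "Host", "Welcome to the show."),
    Segment(4.2, 9.8, "Guest", "Thanks, glad to be hear."),
]
transcript[1] = correct_text(transcript[1], "Thanks, glad to be here.")

for seg in transcript:
    print(f"[{timecode(seg.start)} - {timecode(seg.end)}] {seg.speaker}: {seg.text}")
```

Because every segment keeps its start and end time, you can always jump from a line of text back to the exact moment in the recording.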

From there, the team refines the outline into a more detailed script, which forms the backbone of the final product, defining the structure and flow of the episode.

For true text-based audio editing, tools like Descript are used, where changes to the transcript directly alter the audio, offering a seamless way to refine both text and sound. This makes it easy to decide which parts of a conversation to keep, which to cut, and how to refine the material—all while having the option to listen back to the exact point in the audio to ensure nothing important is lost. Airborne employs this method periodically. I've also experimented with Detail.io, which offers a similar text-driven approach to video editing, making complex edits more intuitive and efficient.
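One way to picture text-based audio editing is as a cut list: deleting a line of transcript removes its time range from the audio that gets kept. The sketch below is an assumption about the general idea, not a description of how Descript or Detail.io work internally; the function name `keep_ranges` and the sample segments are invented for illustration.

```python
def keep_ranges(segments, removed_indices):
    """Return merged (start, end) audio ranges for the segments that remain.

    segments: list of (start_seconds, end_seconds, text) tuples in time order.
    removed_indices: set of positions the editor deleted from the transcript.
    """
    ranges = []
    for i, (start, end, _text) in enumerate(segments):
        if i in removed_indices:
            continue  # deleted text means this stretch of audio is cut
        if ranges and ranges[-1][1] == start:
            # Directly follows the previous kept segment: extend that range.
            ranges[-1] = (ranges[-1][0], end)
        else:
            ranges.append((start, end))
    return ranges


# Invented sample transcript: (start, end, text)
segments = [
    (0.0, 4.0, "Welcome to the show."),
    (4.0, 9.0, "Um, let me think about that."),
    (9.0, 15.0, "We began with a simple question."),
    (15.0, 20.0, "And the answer surprised us."),
]

# The editor deletes the second line in the transcript.
cuts = keep_ranges(segments, removed_indices={1})
print(cuts)  # [(0.0, 4.0), (9.0, 20.0)]
```

An audio tool could then export only those ranges, which is why cutting a sentence in the text also cuts it from the sound.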

Extractive vs. Abstractive Summaries

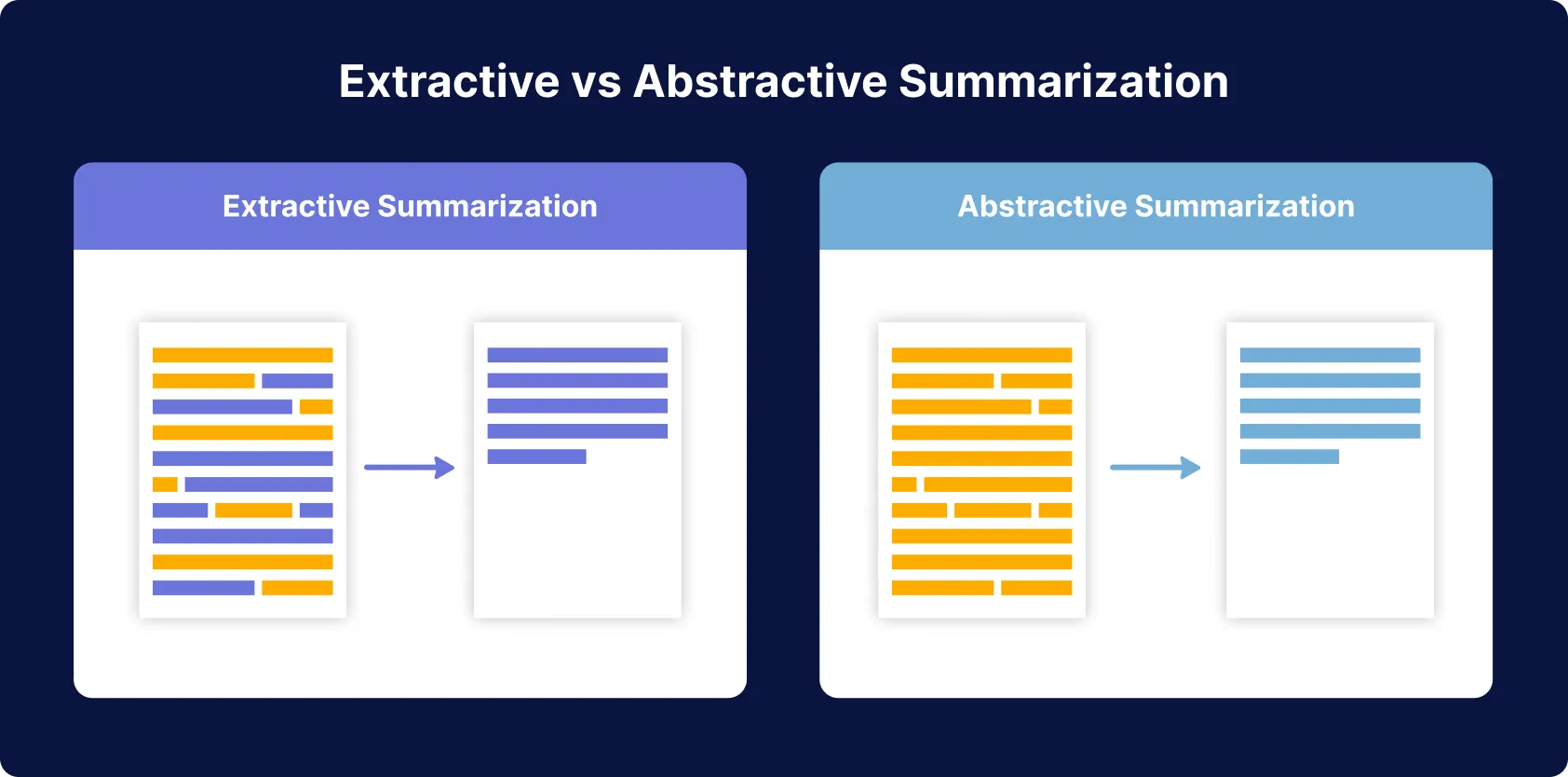

During our chat, we touched on something that’s fundamental to working with AI in creative processes—the difference between extractive and abstractive summaries.

I explained it to Marvin like this: An extractive summary is like what we learned in primary school, where you highlight what’s important—essentially a quote pulled from the material. Meanwhile, an abstractive summary is about rephrasing and synthesising meaning, capturing the core ideas and transforming them into something new.
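The extractive half of that distinction is simple enough to sketch in a few lines of Python. This is a deliberately naive illustration under my own assumptions (word-frequency scoring, a tiny stopword list, invented sample text); it is not the method Airborne or any particular tool uses. An abstractive summary, by contrast, needs a language model to write new sentences, so it is not shown here.

```python
import re
from collections import Counter

# Small, hand-picked stopword list; a real tool would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it",
             "that", "we", "for", "on", "was", "with", "our"}


def content_words(text):
    """Lowercase words with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text, k=2):
    """Pick the k highest-scoring sentences and return them verbatim, in order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        # Average frequency, so long sentences do not win on length alone.
        return sum(freq[w] for w in tokens) / max(len(tokens), 1)

    top = sorted(sentences, key=score, reverse=True)[:k]
    return [s for s in sentences if s in top]


# Invented sample text for illustration.
sample = (
    "Sound design shapes how listeners feel. "
    "We recorded for two hours. "
    "Good sound design makes listeners feel present in the story. "
    "Lunch was late that day."
)

for quote in extractive_summary(sample, k=2):
    print("-", quote)
```

Every line it returns is a literal quote from the source, which is exactly what makes the summary extractive: the highlighter picks, it never rephrases.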

Marvin and his team use AI for both. They create extractive summaries to get a shortlist of key points, almost like a puzzle piece collection, which then allows them to decide on a narrative. But the real creative part comes when they start working on the abstractive summaries. They ask, “What’s the story we’re trying to tell?” and “What’s the message for our audience?”

AI helps to pull out highlights, but it’s the human touch that brings the real meaning and flow to the podcast.

Adding the Personal Touch: Voice and Emotion

What stood out for me during our discussion was how even in a highly tech-driven workflow, the human element remains irreplaceable. For example, Marvin mentioned the importance of having a recognisable voice tied to the podcast: “We usually choose hosts who speak to the imagination, someone with a genuine connection to the topic,” he said.

AI might help speed up production, but there’s still something fundamentally intimate about knowing who you’re listening to.

It’s not just about summarising and editing. AI is also used for other parts of production—like generating show notes or even enhancing sound effects and creating an immersive atmosphere.

Marvin gave an example of creating podcasts for KLM where they used sound design to enrich their storytelling, evoking the sound of Buenos Aires or the crunching snow of Alaska. Although this project predated the current generation of AI tools, it illustrates the value of immersive sound design.

In more recent projects, like 'Dieren laten van zich horen' for Albert Heijn, they used extensive sound effects (SFX) to create a vivid experience. Today, tools like Wondercraft.ai can even generate SFX based on prompts, making it easier and faster to add those layers of immersion.

AI as a Creative Collaborator

My favourite insight from the conversation was this: AI is not about replacing the human role in podcasting; it’s about enhancing it. I often walk through Amsterdam, earbuds in, listening to transcripts read aloud while crafting my blog posts.

AI gives me the freedom to create without being tied to a desk. Similarly, for Marvin and his team, AI takes over the tedious parts of production, letting them focus on the creative work—like turning abstractive insights into compelling narratives.

Marvin and I both agreed that the true magic lies in balancing the efficiency AI offers with the uniquely human capacity for creativity and connection. It’s about working smarter and having more fun while doing it.

---

This is a glimpse into how AI is being used behind the scenes in podcast production today. If you’re a digital professional interested in using these kinds of tools, think of AI as your co-pilot. It can do the heavy lifting, so you can focus on what you love most: telling stories that matter.