From Typing to Talking

From speech synthesis to dictation, I explore how evolving AI tools make communication swifter and more natural. Dive into my journey through spoken and written digital modes.

For as long as I’ve been fascinated by the digital realm, the intersection of language and technology has intrigued me. The digital world thrives on words—whether spoken or written. Yet, speaking predates writing by millennia, shaping human interaction long before the written word took hold. This led me to explore how information is structured, especially as we see a resurgence of oral culture with advancements in AI technologies like speech-to-text (StT) and text-to-speech (TtS).

In my exploration, I’ve found that while text remains compact, easier to store, and more searchable, spoken language carries nuances—intonation, emotion, and even the subtleties of body language—that text often loses. This shift back towards an oral mode of communication, facilitated by AI, intrigued me, especially since it blurs the lines between speaking and writing.

The Return to Oral Culture Through AI

With tools like StT and TtS, we’re witnessing a partial return to an oral culture in our digital spaces. I wanted to understand how I could seamlessly switch between the two modes—speech and text.

This investigation turned into a fascinating journey. I’ve come to realise that dictation, while faster than typing, works best when you have a clear idea of what you want to say. If your thoughts are more fluid or exploratory, recording and then transcribing might still be the better approach.

The Tools Behind My Workflow

I’ve experimented with various tools to find what works best for my needs:

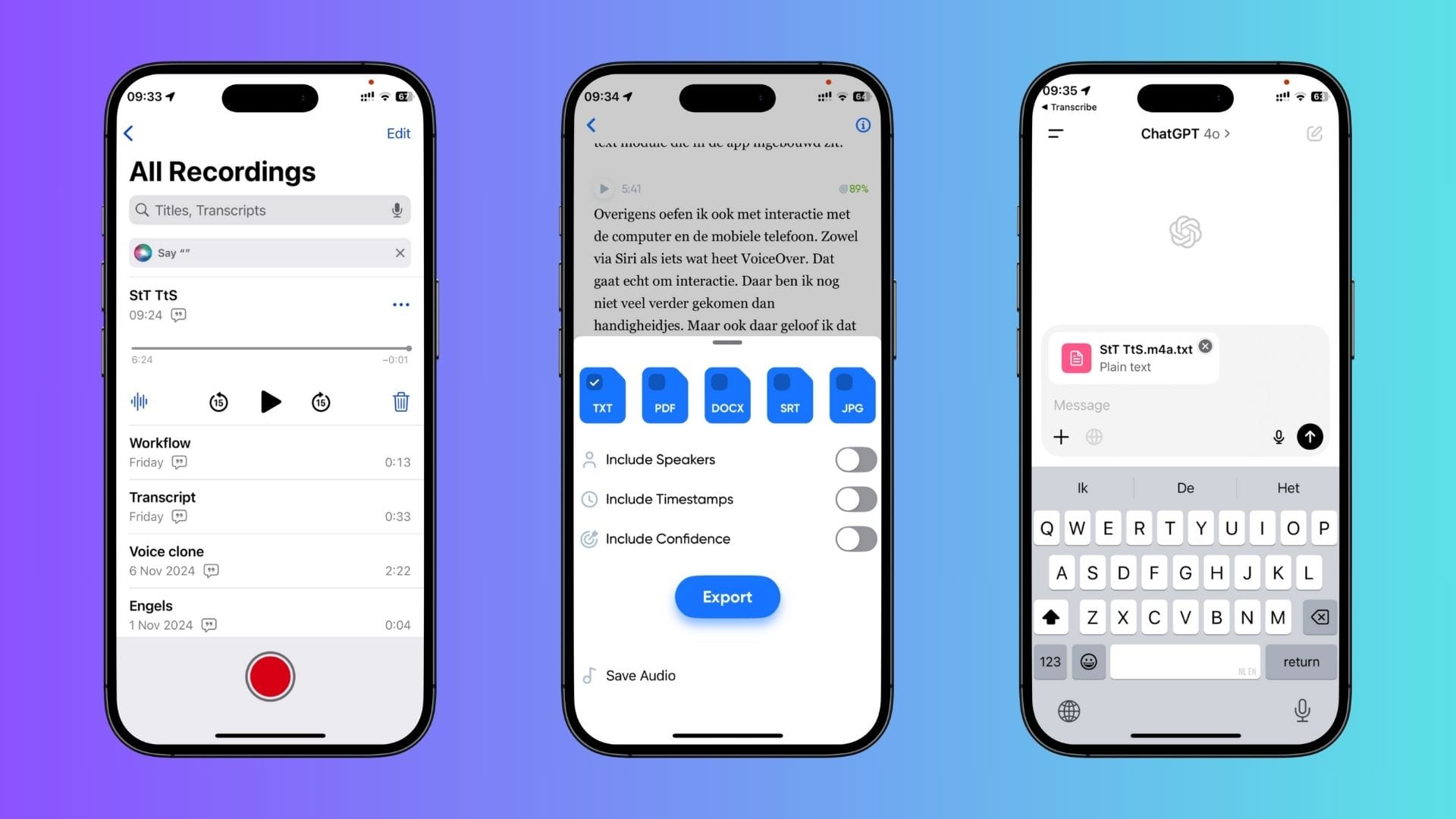

- Transcription: I use three methods for transcription: local, cloud-based, and for video calls. Locally, I use OpenAI’s Whisper, which keeps everything on my device, ensuring privacy and control over my data. For cloud-based transcription, I use an application called Transcribe, which is cross-device and offers flexibility in accessing my transcriptions wherever I need them. For video calls, I use Fireflies, which automatically records and transcribes my calls, including speaker tracking—a feature that differentiates who said what. This combination has been incredibly useful for projects requiring detailed dialogue analysis.

- Speech Synthesis: Beyond transcription, I’ve delved into the world of speech synthesis. I even created a clone of my voice, allowing me to generate spoken content that feels more personal and connected to my style. This is particularly helpful for projects where I need to update content regularly. For instance, in screencast videos, where the visuals change frequently, using TtS to sync updated narration with new footage is much more efficient than re-recording everything.

Dictation: Efficiency and Built-In Features

One of the biggest revelations for me was the sheer efficiency of dictation. I’ve found that dictation is at least three times faster than typing, especially for longer texts. While typing can help with concentration, dictation feels more natural and fluid when capturing initial thoughts.

Although dictation has limitations—like not being able to work in complete silence—it benefits from improved local computing power, which also makes it more private. Ironically, typing on a phone, despite being tactile, often leads to physical strain, whereas dictation is more comfortable and efficient in many situations.

I’ve also embraced the convenience of OS-level transcription features on iOS and macOS. Having StT functionality integrated directly into the system means I can access it anywhere, whether I’m working on my laptop or my phone. This kind of consistency is a game-changer. Although I continue to experiment with platforms like ChatGPT’s transcription capabilities, I appreciate the flexibility of being able to dictate wherever inspiration strikes.

Navigating Voice Commands and Interaction

Beyond transcription and synthesis, I’ve been exploring voice commands on both desktop and mobile devices, using tools like Siri and VoiceOver. These experiments are still in their early stages, but I’m convinced that as AI continues to evolve, the way we interact with our devices will shift dramatically.

We’re moving away from traditional Graphical User Interfaces (GUIs) towards more natural, voice-driven Application Programming Interfaces (APIs). I plan to delve deeper into this in future posts.

A New Way of Working

Ultimately, my journey into StT and TtS has taught me that these technologies aren’t just about efficiency; they represent a new way of working, one that’s more in tune with the natural flow of human communication. By blending spoken and written modalities, we can communicate more effectively, streamline our workflows, and adapt to the evolving digital landscape.

I’m still learning, still practising, but I see a future where speech and text coexist seamlessly, allowing us to focus less on the tools themselves and more on the messages we want to share.